How to save on cloud costs with workload management – Part 3: Learn from past mistakes

Most tools that provide insight into cloud cost reduction focus on what you can do now based on what is currently running in your cloud. That makes sense.

However, you also want to consider what you could have done differently in the past, so that you avoid repeatedly overpaying for short-lived instances. If you’re making effective use of a cloud environment, then instances will run only when they are required.

As application volumes increase, demand will be distributed among instances and, when threshold rules are breached, new instances will spin up to serve that demand and then be terminated when no longer required.

The transient nature of these machines makes them difficult to analyse. In Azure scale sets or AWS (Amazon Web Services) Auto Scaling groups, each instance has a pre-defined configuration, and rules are in place to spin up machines automatically at certain demand levels and times of day.

If these machines have the wrong configuration, then you could be incurring a significant bill for running large numbers of short-term machines that are not optimised, and it is difficult to see what’s really going on using the providers’ tools. The auto-scaling rules may be too aggressive, resulting in too many unoptimised machines running.

If this is the case, it could result in your organisation buying reservations or savings plans that are not needed and you’d be locking yourself into a sub-optimal configuration by committing to significant spend before optimising.

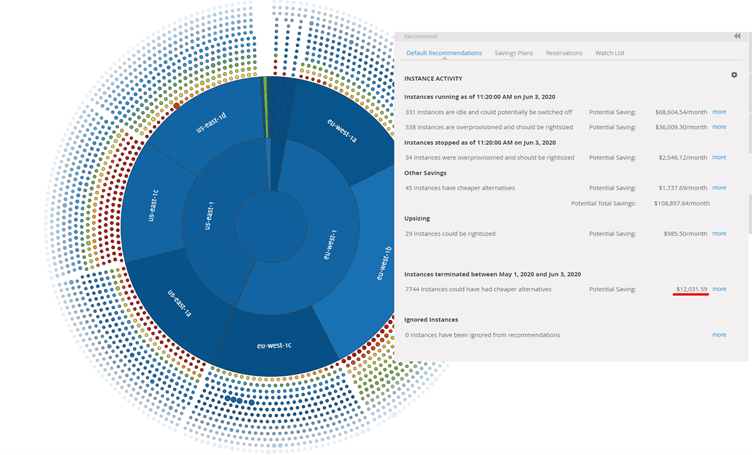

Continual analysis of auto-scaling applications can determine whether they can be run more cost-effectively by changing the profile of the instances that are started automatically. Capacity Planner provides this insight by recommending not only changes that can be made to running instances, but also changes that could have been made to terminated instances. This often requires detailed analysis across thousands of instances or machines over a prolonged period.

Could it have been cheaper?

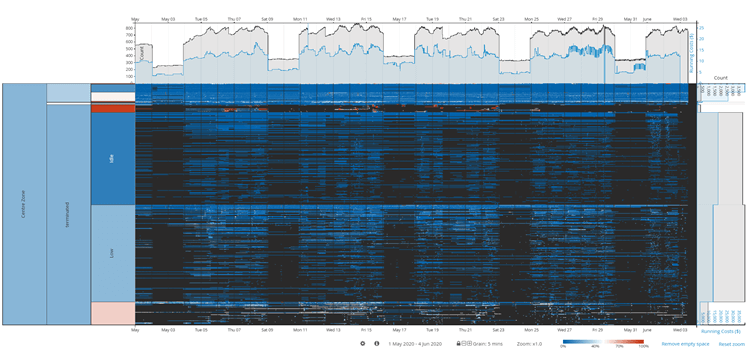

As you may recall from our previous blog posts on idle time management and on optimising cloud costs through right-sizing and right-buying, the timeburst visualisation shows a heatmap of utilisation for all instances over a period of time, regardless of whether they are still running. With this visualisation, you can view short-lived instances and their activity levels. This allows you to determine whether there were cheaper alternatives to how things have been run in the past.

The timeburst visualisation below shows five-minute CPU utilisation data for every instance in this environment that ran during the month of May. In that month, a total of 9,940 instances were run, of which 9,059 were terminated.

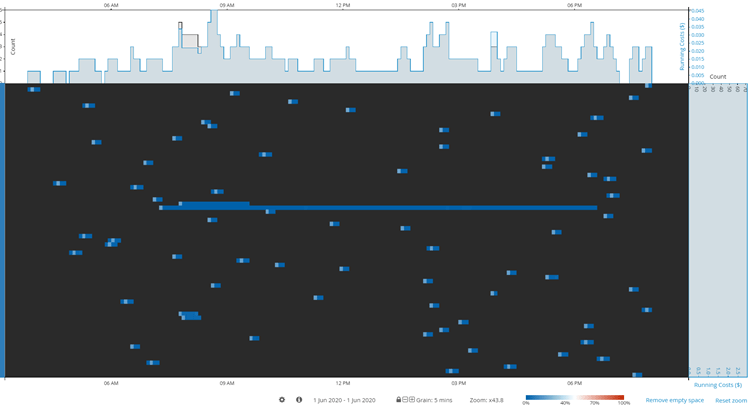

We can zoom in to gain insight at a very high level of detail. In this example, we can see a collection of terminated machines on a single day in June in one part of the estate, and all but two of these machines have run for noticeably short periods of time, some for as little as 10 minutes. Because this is a view of peak CPU activity, you can see that very few of these short-running machines have used their allocated resources.

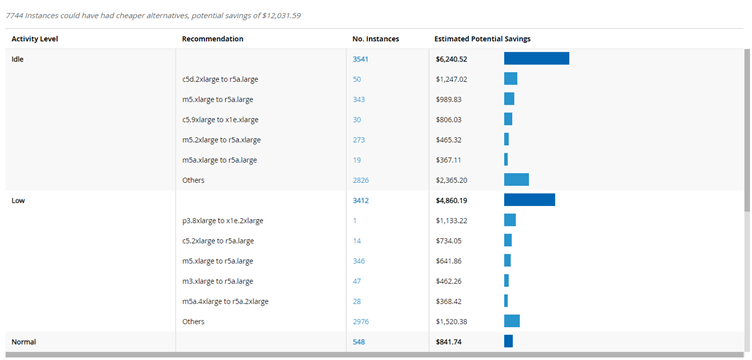
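The kind of filter behind this view can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Capacity Planner works internally: the `InstanceRun` record, the one-hour runtime cut-off, the 20% peak CPU threshold and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class InstanceRun:
    """One terminated instance (hypothetical record layout)."""
    instance_id: str
    launched: datetime
    terminated: datetime
    peak_cpu_pct: float  # highest five-minute CPU sample seen during the run


def short_lived_underused(runs, max_runtime=timedelta(hours=1), cpu_threshold=20.0):
    """Return runs that ended quickly and never pushed CPU past the threshold.

    Both cut-offs are assumptions chosen for illustration.
    """
    return [
        r for r in runs
        if (r.terminated - r.launched) <= max_runtime
        and r.peak_cpu_pct < cpu_threshold
    ]


# Made-up sample data: a 10-minute idle run, a long busy run, a short busy run.
runs = [
    InstanceRun("i-aaa", datetime(2023, 6, 1, 9, 0), datetime(2023, 6, 1, 9, 10), 4.0),
    InstanceRun("i-bbb", datetime(2023, 6, 1, 9, 0), datetime(2023, 6, 1, 17, 0), 85.0),
    InstanceRun("i-ccc", datetime(2023, 6, 1, 12, 0), datetime(2023, 6, 1, 12, 30), 62.0),
]

flagged = short_lived_underused(runs)
print([r.instance_id for r in flagged])
```

Only the 10-minute run with low peak CPU is flagged; the short run that was genuinely busy is left alone, which is the distinction the heatmap makes visible.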

Of those 9059 machines that are now terminated, 7744 of them had cheaper alternatives. If those machines had been run differently, $12,031 could’ve been saved in on-demand costs.

This chart then breaks down those alternatives into the changes that could have made the biggest impact on costs. You can see that 50 short-lived c5d.2xlarge machines had such low levels of activity that they were considered to be idle. If they had been run as r5a.nano machines, this could’ve resulted in on-demand savings of $1,247. At the very least, they could’ve been run as c5d.large, and spend in this area could’ve been reduced by 75%.
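The 75% figure follows from instance pricing within a family, where each step down in size roughly halves the hourly rate, so two steps down costs about a quarter as much. A minimal sketch of that arithmetic is below; the hourly prices are illustrative assumptions rather than figures from a current price list, and the function names are hypothetical.

```python
# Assumed on-demand hourly prices in USD, for illustration only.
HOURLY_PRICE_USD = {
    "c5d.2xlarge": 0.384,
    "c5d.large": 0.096,
}


def saving_fraction(current, alternative, prices=HOURLY_PRICE_USD):
    """Fraction of spend avoided by running `alternative` instead of `current`."""
    return 1 - prices[alternative] / prices[current]


def saving_usd(current, alternative, hours, prices=HOURLY_PRICE_USD):
    """Absolute saving over a number of instance-hours."""
    return (prices[current] - prices[alternative]) * hours


fraction = saving_fraction("c5d.2xlarge", "c5d.large")
print(f"Saving from c5d.2xlarge to c5d.large: {fraction:.0%}")
```

For short-lived machines the absolute saving per instance is small, which is why the total only becomes visible when thousands of terminated instances are analysed together.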

Once you’ve identified these opportunities, the challenge will be convincing the engineering or operations team to change their auto-scaling rules to use different instance types. Depending on the application, it may not be suitable to change from compute optimised to general purpose, or from Intel to AMD or Graviton2.

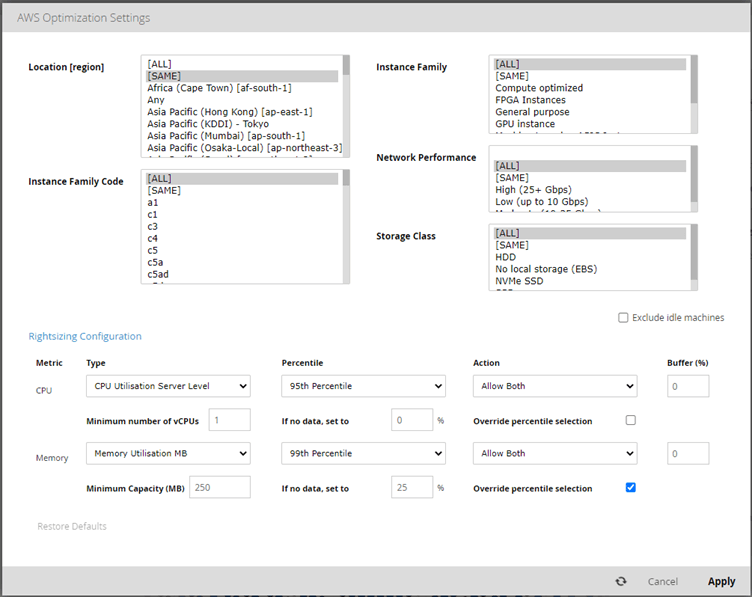

Capacity Planner allows you to configure recommendations using several levers, including maintaining network and storage performance and family type. This can be done across the entire estate or at an individual instance level for a deep dive.

Choose your metrics wisely

You can also choose the individual metrics and measures to use. Perhaps it’s important to use peak CPU or 99th percentile CPU. Does your workload need a very particular peak capacity to cope with short-lived high demand, or does a less sensitive measure provide a better picture of what the machine is doing during most of its operational life? While most cloud cost optimisation tools will use either average or peak, Capacity Planner provides a more nuanced approach by giving you not only a full range of statistical measures to use for every workload, but also the ability to select the time frame on which to base those measures. This could be a three-month window, a chosen month, or a chosen week or day.
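To see why the choice of measure matters, consider a machine that sits at low utilisation apart from one brief burst. The sketch below uses a plain nearest-rank percentile and made-up samples; it is not Capacity Planner's own calculation, and the `percentile` and `summarise` helpers are hypothetical names.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample that has at least
    pct% of all samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarise(samples):
    """Average, 99th percentile and peak for a list of CPU samples."""
    return {
        "average": mean(samples),
        "p99": percentile(samples, 99),
        "peak": max(samples),
    }


# Hypothetical five-minute CPU samples: steady at 10% with a single burst to 95%.
cpu = [10.0] * 99 + [95.0]
summary = summarise(cpu)
print(summary)
```

Sizing on peak would treat this as a machine that needs headroom for 95% CPU, while the 99th percentile and the average both describe a machine that is nearly idle. Which reading is right depends on whether that single burst matters to the workload.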

If you are looking to right-size within a scale set, it may be that the family has been chosen for a reason. Perhaps compute optimised is preferred over general purpose, or the software requires a minimum number of cores. Again, this can be configured. Right-sizing accurately within a family type can also be challenging when there is no visibility of memory utilisation.

Capacity Planner supports the integration of memory from other monitoring tools. However, should this not be available, you can instruct the rightsizing capability to allow memory to be reduced by any percentage of your choosing. Adjusting these settings, even if restricted to staying with a particular family of instances, can still yield significant potential savings.
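A rough sketch of how a memory-reduction allowance might constrain right-sizing within one family is shown below. It is an illustration under assumptions, not the product's algorithm: the small catalogue of c5d sizes, the `rightsize_in_family` function and its parameters are all hypothetical, and `needed_vcpus` stands in for whatever CPU requirement the chosen measure produces.

```python
# Illustrative catalogue for one family: (name, vCPUs, memory in GiB), smallest first.
C5D_SIZES = [
    ("c5d.large", 2, 4),
    ("c5d.xlarge", 4, 8),
    ("c5d.2xlarge", 8, 16),
    ("c5d.4xlarge", 16, 32),
]


def rightsize_in_family(current, needed_vcpus, memory_reduction_pct,
                        min_vcpus=1, sizes=C5D_SIZES):
    """Pick the smallest size in the family that still meets the CPU need,
    the minimum core count, and the allowed reduction in memory.

    memory_reduction_pct is how far memory may shrink relative to the
    current size when no memory utilisation data is available.
    """
    specs = {name: (vcpus, mem) for name, vcpus, mem in sizes}
    _, current_mem = specs[current]
    min_memory = current_mem * (1 - memory_reduction_pct / 100)
    for name, vcpus, mem in sizes:
        if vcpus >= max(needed_vcpus, min_vcpus) and mem >= min_memory:
            return name
    return current  # nothing smaller qualifies, so stay put


print(rightsize_in_family("c5d.2xlarge", needed_vcpus=2, memory_reduction_pct=50))
print(rightsize_in_family("c5d.2xlarge", needed_vcpus=2, memory_reduction_pct=75))
```

With a 50% allowance the recommendation stops at c5d.xlarge, and with 75% it can drop to c5d.large. Setting `min_vcpus=4` would hold it at c5d.xlarge regardless, which mirrors the minimum-cores constraint described above.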

This insight allows you to configure your auto-scaling or scale-set configuration and answer some crucial questions more accurately. Are the instance types appropriate for your workload? Are the utilisation levels at which additional machines are instantiated configured optimally? Are you running machines when the demand profile indicates that there is no need to? Capacity Planner empowers you to suggest policy changes for auto-scaling applications that can lead to significant cost savings and provide the evidence needed to enable the change.